Create Inventory of Existing Log Analytics Workspaces

- Shannon

- Mar 14, 2021

Seeing cloud adoption gain traction over the years has always put a smile on my face. Customers have made some successful migrations and transitions into a cloud-based world. Some early adoption choices have caused pain after the fact: sprawling environments, no governance, and no control over what's been deployed. Optimization is now key, but it's hard. In my first blog post of this series, I talked about the right design for Log Analytics workspaces. But what about customers that implemented Log Analytics many years ago and have a number of different workspaces? What is involved with the consolidation and optimization effort?

First off, you will want to start by figuring out what you have related to Log Analytics workspaces. There are some components you will need to examine as you consolidate and streamline existing deployments.

You will first want to check the SKU. On April 2, 2018, the Log Analytics default SKU changed to per GB. What this means is any newly onboarded Log Analytics workspace will use consumption-based pricing vs. per node pricing (or free pricing). Many customers have pre-April 2018 workspaces still in existence. Customers can continue to use those workspaces or move existing workspaces to a new pricing model for consistency.

Next you will want to see if the workspaces are using "enable log access using only resource permissions." As I covered in my last post, this model provides a way for business units to only see logs generated by their resources in a central Log Analytics workspace deployment. When I worked with customers more closely, there used to be a myriad of different workspaces deployed. So something like 1 workspace for security, 1 workspace for the middleware team, 1 workspace for operations, etc. This newer permissions model allows for 1 workspace and provides permissions to resources that only certain groups need to see (so a user would not see all logs in the workspace).

For consistency, you will probably want to switch older Log Analytics workspaces to the per GB model and enable log access using only resource permissions. Why? Well, cloud requires scale and consistency. Having outliers may be fine now, but optimization and cleanup are part of the burden of responsibility. Going through this exercise now will help with predictability and design in the future. I'll even help by providing a few scripts over the course of 1-2 more blog posts so you can use them to clean up what you may have deployed.

I tend to hang out in PowerShell too much, so my solution will sit in that space (apologies in advance). If you're better with Python or the Azure CLI, I'm pretty positive you can write a script that does the same thing my scripts do. Also, if you are open to collaborating on a post, drop me a comment. I'd love to work with you on creating something that's a bit more open source and less PowerShell-y.

Start out by either using the Azure Cloud Shell or initiating an authenticated remote PowerShell session to Azure from your local machine. You may have quite a number of subscriptions within your Azure world. Let's grab all subscriptions, loop through each subscription, and get a readout on all workspaces that may belong to you in your environment. This will help you figure out what you already have deployed.
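As a minimal sketch of that starting point, assuming the Az.Accounts and Az.ResourceGraph modules from the PowerShell Gallery (in Azure Cloud Shell you are already signed in, so the `Connect-AzAccount` step can be skipped):

```powershell
# One-time setup on a local machine (uncomment if the modules are missing).
# Install-Module Az.Accounts -Scope CurrentUser
# Install-Module Az.ResourceGraph -Scope CurrentUser

# Sign in interactively; not needed in Azure Cloud Shell.
Connect-AzAccount

# List the subscriptions the signed-in account can see.
Get-AzSubscription | Select-Object Name, Id, State
```

The subscription list is only a sanity check here; it shows which subscriptions your account can reach before you go looking for workspaces.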

In order to effectively grab everything that lives in all your subscriptions, Azure Resource Graph is the right tool to lean on. Using Azure Resource Graph provides "efficient and performant resource exploration with the ability to query at scale across a given set of subscriptions so that you can effectively govern your environment." I personally love Azure Resource Graph because of the ability I have in querying resources and extracting + sorting what I need to make the right decisions about resources within my environment.

The cmdlet I'll focus on is Search-AzGraph. As I built out the script I'll share at the end, I had to do some exploratory searching so I knew what I was dealing with. Search-AzGraph requires a -Query parameter, and the query language is similar to the Kusto Query Language. I started by looking over an example of how to use Search-AzGraph with a query parameter. In that example, the type is Microsoft.Compute/virtualMachines: Microsoft.Compute is the resource provider and virtualMachines is the resource type. Resource providers support creation, alteration after deployment, and deletion of Azure resources. Log Analytics workspaces exist within the Microsoft.OperationalInsights resource provider.

I began by typing in the following query:
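A minimal sketch of such a query, assuming an authenticated session with the Az.ResourceGraph module loaded (this is a reconstruction and may not match the exact command used in the post):

```powershell
# Return every Log Analytics workspace visible to the current context.
# "=~" is a case-insensitive comparison, so the casing of the type string does not matter.
Search-AzGraph -Query "Resources | where type =~ 'Microsoft.OperationalInsights/workspaces'"
```

By default Search-AzGraph returns only the first 100 results; the `-First` parameter raises that limit to at most 1000 per call.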

SUCCESS! I received all Log Analytics workspaces within each subscription I had permissions to view. I received a number of responses and the actual output looks similar to what is shown below (note, I'm only showing 1 full response of the many Log Analytics workspaces I have access to):

In terms of creating the inventory and understanding what's deployed, I decided to store all the Log Analytics workspaces in an array variable and see how to extract information about the SKU and the permissions model. I decided to use $workspaces as the variable for the next cmdlet I ran:
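Storing the results might look like the following sketch, which assumes the same query as above; only the `$workspaces` variable name comes from the post:

```powershell
# Capture the query results so they can be inspected repeatedly without re-querying.
$workspaces = Search-AzGraph -Query "Resources | where type =~ 'Microsoft.OperationalInsights/workspaces'"

# Quick check of how many workspaces came back.
$workspaces.Count
```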

If you look at the output from running $workspaces on its own, it will be easy to extract name, location, and resource group using dotted notation. To ensure everything was declared correctly, I ran the next 3 cmdlets to verify I would be capturing the right data.
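A sketch of those three checks, assuming `$workspaces` holds Search-AzGraph results (depending on the Az.ResourceGraph version, the rows may also be exposed under a `.Data` property):

```powershell
$workspaces = Search-AzGraph -Query "Resources | where type =~ 'Microsoft.OperationalInsights/workspaces'"

# Dotted notation on a collection returns that property from every item.
$workspaces.name
$workspaces.location
$workspaces.resourceGroup
```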

SUCCESS! Each of these fields will be a column in the final report. The SKU and permissions model are contained within properties. I discovered this by looking at the output from an earlier command. So I ran the following cmdlet to see what showed up:
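Looking inside the properties bag could be as simple as this sketch, again assuming `$workspaces` holds the Search-AzGraph results:

```powershell
$workspaces = Search-AzGraph -Query "Resources | where type =~ 'Microsoft.OperationalInsights/workspaces'"

# Show the nested properties object for each workspace; sku and features live in here.
$workspaces.properties
```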

I received outputs for each Log Analytics workspace that looked like this:

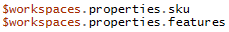

I then tried the following cmdlets to see if I could extract the right data:
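A sketch of drilling one level deeper, assuming the same `$workspaces` variable; `sku` and `features` are the names used in the workspace resource schema:

```powershell
$workspaces = Search-AzGraph -Query "Resources | where type =~ 'Microsoft.OperationalInsights/workspaces'"

# Pricing tier of each workspace.
$workspaces.properties.sku

# Feature flags, including the resource-permissions access mode.
$workspaces.properties.features
```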

Boom...I received outputs that made me think I could really build an inventory report to help companies out. Here's an example of the SKU output:

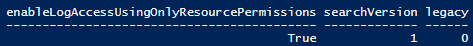

And here's an example of the features output:

Building the script took a bit of time and I finally landed on creating a custom PS Object. I did this because name, resource group, and location were easy to extract. SKU and the permissions model were more difficult and required going an extra layer deeper into dotted notation.
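To illustrate the extra layer of dotted notation without touching Azure, here is a small sketch that flattens one mocked workspace record into a report row. The sample values are invented for illustration; the nested property names (`properties.sku.name` and `properties.features.enableLogAccessUsingOnlyResourcePermissions`) follow the workspace resource schema.

```powershell
# Mock of a single Resource Graph result (hypothetical values, not real output).
$sample = [PSCustomObject]@{
    name          = 'law-example'
    location      = 'eastus'
    resourceGroup = 'rg-monitoring'
    properties    = [PSCustomObject]@{
        sku      = [PSCustomObject]@{ name = 'pergb2018' }
        features = [PSCustomObject]@{ enableLogAccessUsingOnlyResourcePermissions = $true }
    }
}

# Flatten the nested values into one row with simple columns.
$row = [PSCustomObject]@{
    Name                    = $sample.name
    ResourceGroup           = $sample.resourceGroup
    Location                = $sample.location
    Sku                     = $sample.properties.sku.name
    ResourcePermissionsOnly = $sample.properties.features.enableLogAccessUsingOnlyResourcePermissions
}

$row
```

Flattening matters because Export-Csv writes nested objects as type names rather than values, so each column should hold a plain string or boolean.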

Now without further ado, here's the script:
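The original script is not reproduced in this copy of the post. The following is a minimal sketch of an inventory script along the lines described, not the author's exact code: it relies on Resource Graph to span subscriptions rather than looping over them, and the column names and output file name `LogAnalyticsInventory.csv` are assumptions.

```powershell
# Assumes an authenticated session and the Az.ResourceGraph module.
$query = "Resources | where type =~ 'Microsoft.OperationalInsights/workspaces'"

# -First 1000 is the per-call maximum; larger estates would need paging.
$workspaces = Search-AzGraph -Query $query -First 1000

# Build one flat row per workspace.
$inventory = foreach ($workspace in $workspaces) {
    [PSCustomObject]@{
        Name                    = $workspace.name
        ResourceGroup           = $workspace.resourceGroup
        Location                = $workspace.location
        SubscriptionId          = $workspace.subscriptionId
        Sku                     = $workspace.properties.sku.name
        ResourcePermissionsOnly = $workspace.properties.features.enableLogAccessUsingOnlyResourcePermissions
    }
}

# Write the report; -NoTypeInformation keeps the type header out of the CSV.
$inventory | Export-Csv -Path ./LogAnalyticsInventory.csv -NoTypeInformation
```

Sorting `$inventory` by `Sku` or `ResourcePermissionsOnly` before exporting makes the outliers easier to spot.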

If you'd like to copy/paste the actual script and steps, you can grab the script from GitHub as well.

The end results look something like this in the CSV file (I'm only showing a few of the results from running the script against my subscriptions):

With that, please stay tuned. The next blog post will cover how to switch the permissions model for Log Analytics workspaces in your environment + change the SKU for existing workspaces. Together, we'll optimize, optimize, optimize (and maybe even make your bosses happy).

Thank you for explaining how to use the ResourceGraph to pull from all accessible subs. This is handy information. I would note that currently, the Az.ResourceGraph module is not part of the Az umbrella install, so if someone wants to try this, they will need to install the module (Install-Module Az.ResourceGraph -Scope CurrentUser). Also, since the Az modules require PowerShell 5.1 or greater, I recommend using the PSCustomObject to return the results to the output stream. This will allow for multiple ways to save or output the results:

    $workspaces = Search-AzGraph -Query "where type =~ 'Microsoft.OperationalInsights/workspaces'"
    ForEach ($workspace in $workspaces) {
        [PSCustomObject]@{
            Name = $workspace.name
            Location = $workspace.location
            ResourceGroup = $workspace.resourceGroup
            Sku = $workspace.properties.sku
            Features = $workspace.properties.features
        }
    }